Fuzzy Matching Algorithms

What you need to know if you're using Fuzzy Matching Algorithms in customer data applications

“Conventional” Fuzzy Matching

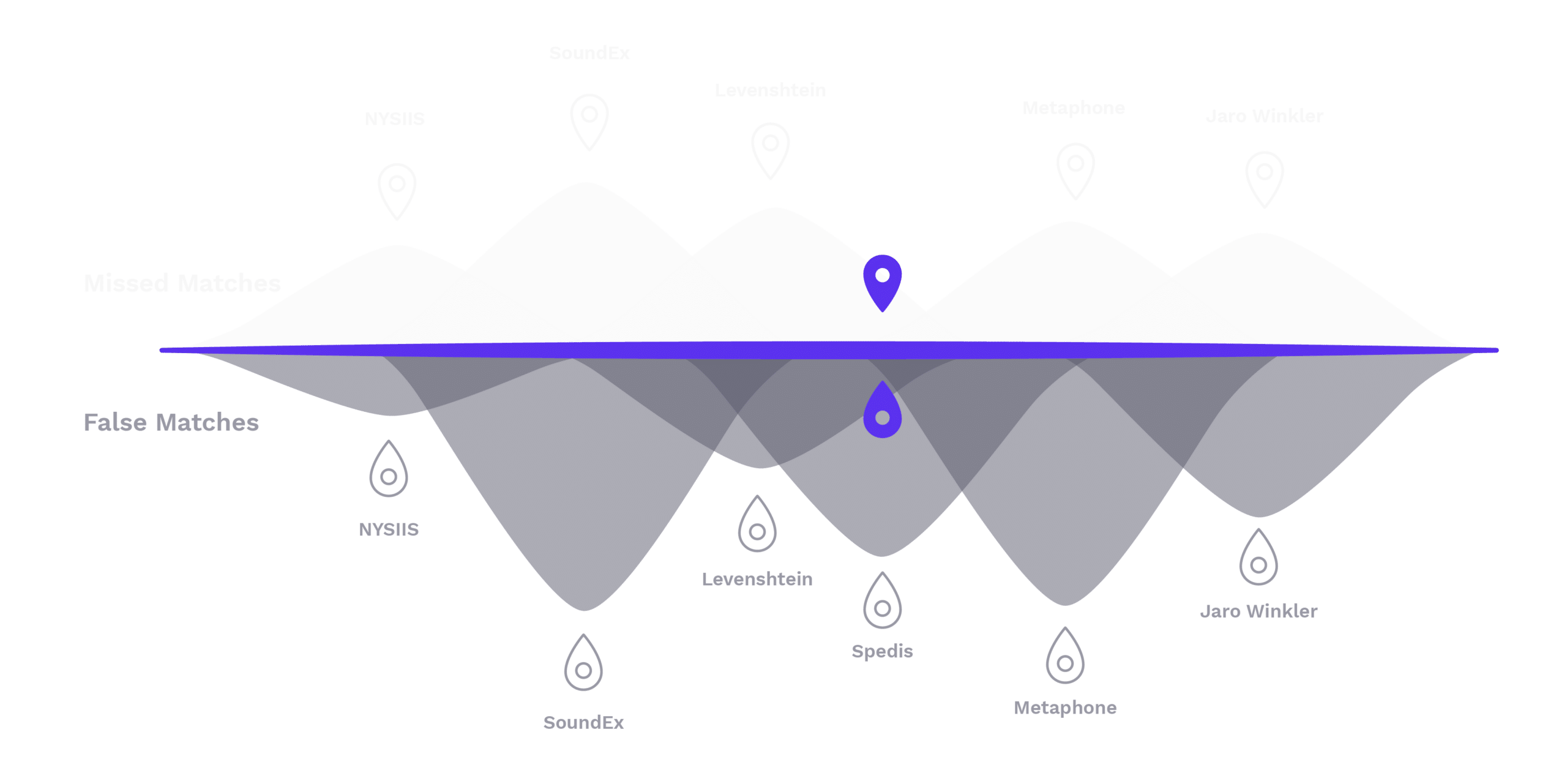

Conventional matching algorithms are narrowly designed to detect specific patterns of difference in data.

Each algorithm produces similarity measures suited to different data scenarios. It’s important to understand that the user doesn’t decide which algorithm is best; the data does. It’s up to the end user to figure out which algorithm the data favors.

Finding the best algorithm takes a cycle of build, test, analyze, tweak and repeat. When one algorithm’s assumptions about the data fail, pick another and try again until you find a winner.
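A minimal sketch of one pass through that build, test, analyze, tweak loop, assuming you have a small hand-labeled sample of record pairs. The pairs, the candidate names and the thresholds below are hypothetical, and the similarity score is Python’s standard-library `difflib.SequenceMatcher.ratio()` (a Ratcliff/Obershelp-style measure), used here only as a convenient stand-in for whatever algorithms you are actually evaluating.

```python
from difflib import SequenceMatcher

# Hypothetical hand-labeled sample: (value_a, value_b, is_true_match)
LABELED_PAIRS = [
    ("Thompson", "Thomson", True),
    ("Lindsey", "Linzy", True),
    ("Thompson", "Johnson", False),
    ("Lindsey", "Lindgren", False),
]


def exact(a, b):
    """Match only if the values are identical, ignoring case."""
    return a.lower() == b.lower()


def prefix4(a, b):
    """Match if the first four letters agree, ignoring case."""
    return a.lower()[:4] == b.lower()[:4]


def ratio_at_least(threshold):
    """Build a comparator from difflib's similarity ratio (0.0 to 1.0)."""
    def compare(a, b):
        return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold
    return compare


# Hypothetical candidate comparators to test against the labeled sample
CANDIDATES = {
    "exact": exact,
    "prefix4": prefix4,
    "ratio>=0.85": ratio_at_least(0.85),
    "ratio>=0.65": ratio_at_least(0.65),
}


def accuracy(compare):
    """Share of labeled pairs the comparator classifies correctly."""
    correct = sum(compare(a, b) == label for a, b, label in LABELED_PAIRS)
    return correct / len(LABELED_PAIRS)


def misses(compare):
    """Labeled pairs the comparator gets wrong: the input to the 'analyze' step."""
    return [(a, b) for a, b, label in LABELED_PAIRS if compare(a, b) != label]


if __name__ == "__main__":
    for name, fn in CANDIDATES.items():
        print(f"{name:12} accuracy={accuracy(fn):.2f} misses={misses(fn)}")
```

On this sample neither threshold wins outright: both score 0.75, but the stricter one misses Lindsey/Linzy while the looser one wrongly accepts Lindsey/Lindgren. That is exactly the point where you tweak (add another comparator, adjust a threshold, label more data) and repeat.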

It’s also important to recognize that a single field of data may require multiple approaches to matching. For example, a distance algorithm easily detects the similarity between Thompson and Thomson (one deleted letter) but not between names like Lindsey and Linzy, which sound alike yet differ by several characters. Catching both types of defect in the same field means testing different phonetic and distance algorithms against that field.
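To make the Thompson/Thomson versus Lindsey/Linzy point concrete, here is a sketch of two classic techniques: Levenshtein edit distance and American Soundex. Both are hand-rolled textbook versions written for illustration, not any particular product’s implementation.

```python
# Soundex digit for each consonant; vowels, y, h and w have no digit
SOUNDEX_CODES = {
    ch: digit
    for letters, digit in (
        ("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
        ("l", "4"), ("mn", "5"), ("r", "6"),
    )
    for ch in letters
}


def levenshtein(a: str, b: str) -> int:
    """Minimum single-character insertions, deletions and substitutions
    needed to turn a into b (two-row dynamic programming)."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


def soundex(name: str) -> str:
    """American Soundex: first letter plus three digits, zero-padded."""
    letters = [ch for ch in name.lower() if ch.isalpha()]
    if not letters:
        return ""
    first = letters[0]
    digits = []
    prev = SOUNDEX_CODES.get(first, "")  # first letter counts for adjacency
    for ch in letters[1:]:
        code = SOUNDEX_CODES.get(ch, "")
        if code:
            if code != prev:  # adjacent letters with the same digit collapse
                digits.append(code)
            prev = code
        elif ch not in "hw":  # vowels and y separate repeats; h and w do not
            prev = ""
    return (first.upper() + "".join(digits) + "000")[:4]


if __name__ == "__main__":
    for a, b in [("Thompson", "Thomson"), ("Lindsey", "Linzy")]:
        print(a, b, levenshtein(a, b), soundex(a), soundex(b))
```

Running it prints an edit distance of 1 for Thompson/Thomson and 3 for Lindsey/Linzy, so a tight distance threshold catches the first pair and misses the second. Plain Soundex matches neither pair (T512 vs T525, L532 vs L520), a reminder that phonetic encoders also have to be tested against your own data rather than assumed to work.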

Other data issues fall outside what conventional matching algorithms address. Nicknames such as Chuck for Charles, or the relationships among city names such as New York, NYC and Brooklyn, require a completely different approach.
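Nickname and alias problems are usually handled with lookup tables rather than string similarity, since Chuck and Charles share few characters. A minimal sketch, assuming hypothetical and deliberately tiny `NICKNAMES` and `CITY_ALIASES` tables:

```python
# Hypothetical, deliberately tiny alias tables; production tables are far larger
NICKNAMES = {
    "chuck": "charles",
    "charlie": "charles",
    "bill": "william",
    "liz": "elizabeth",
}

# Hypothetical mapping of city names and boroughs onto one canonical city
CITY_ALIASES = {
    "nyc": "new york",
    "new york city": "new york",
    "brooklyn": "new york",
}


def canonical(value: str, table: dict[str, str]) -> str:
    """Lowercase, collapse whitespace, then replace known aliases."""
    key = " ".join(value.lower().split())
    return table.get(key, key)


def same_entity(a: str, b: str, table: dict[str, str]) -> bool:
    """True if both values resolve to the same canonical form."""
    return canonical(a, table) == canonical(b, table)


if __name__ == "__main__":
    print(same_entity("Chuck", "Charles", NICKNAMES))
    print(same_entity("NYC", "Brooklyn", CITY_ALIASES))
```

A real nickname table has to map one nickname to several candidates (Alex can stand for Alexander or Alexandra), and mapping Brooklyn onto New York is a judgment call that depends on whether your use case treats a borough as its city.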

The Fact is: Data isn’t Perfect

#DataGymnastics and #RegExHell

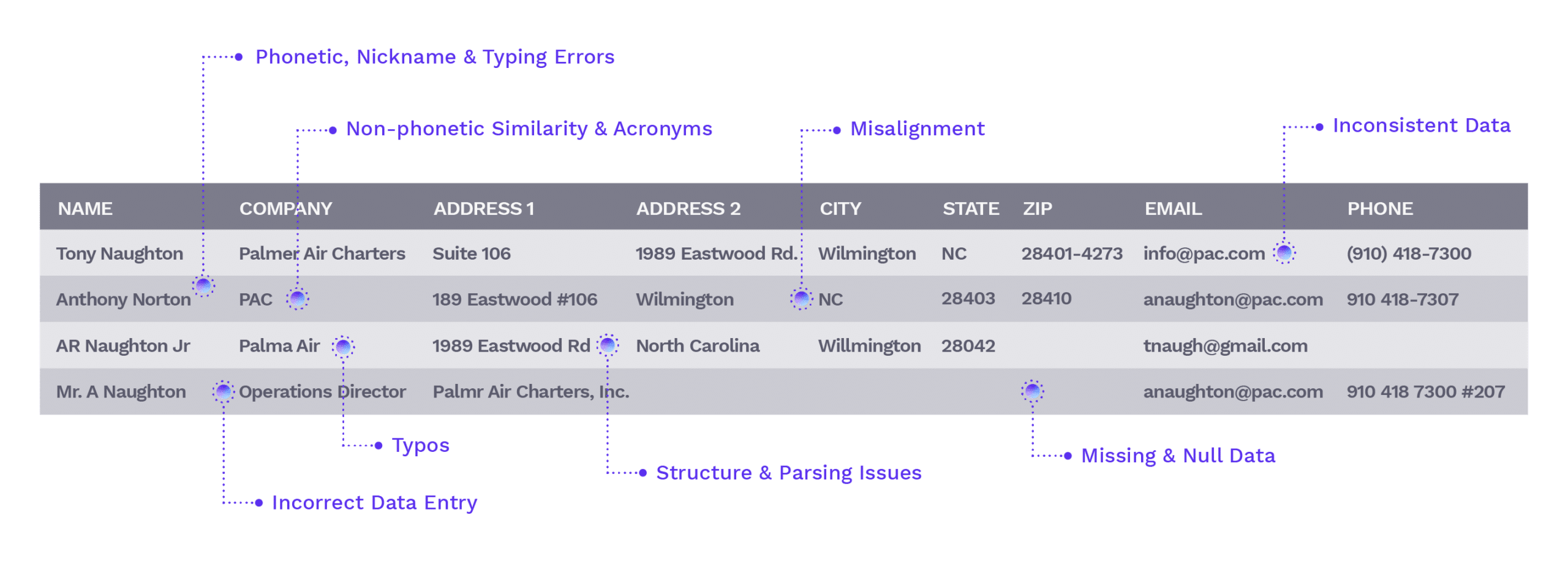

Using fuzzy matching algorithms to match and unify customer data isn’t exactly easy. As a matter of fact, it’s hard. Customer data has many nuances, and as a result, fuzzy matching algorithms are only part of the matching equation.

Every data inaccuracy starts at the point of entry, and in every instance, regardless of its source, the contact record was created by a human. Take a moment to think about that statement. Your data and the data you acquire come from somewhere, and the genesis is a human with fingers on a keyboard.

Conventional matching processes use a library of algorithms like Soundex, Metaphone and Levenshtein, and require significant data wrangling to extract, transform, standardize and normalize data before matching. The algorithms must then be folded into substring matchkeys to find potential fuzzy and phonetic matches.
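A sketch of the standardize-then-matchkey step described above, assuming a hypothetical key built from the first four letters of the surname, the first initial, the five-digit ZIP code, the house number and the first three letters of the street name. Real matchkeys vary widely and often embed a phonetic code such as Soundex instead of a raw prefix.

```python
import re
from collections import defaultdict

# Hypothetical standardization table for street suffixes
SUFFIXES = {"STREET": "ST", "AVENUE": "AVE", "ROAD": "RD"}


def standardize_street(street: str) -> str:
    """Uppercase, replace punctuation with spaces, abbreviate known suffixes."""
    words = re.sub(r"[^A-Za-z0-9 ]", " ", street).upper().split()
    return " ".join(SUFFIXES.get(w, w) for w in words)


def matchkey(record: dict) -> str:
    """Hypothetical substring matchkey: SURN|I|ZIP5|HOUSE|STR."""
    last = re.sub(r"[^A-Z]", "", record["last"].upper())    # letters only
    first = re.sub(r"[^A-Z]", "", record["first"].upper())
    zip5 = re.sub(r"\D", "", record["zip"])[:5]              # digits only
    street = standardize_street(record["street"]).split()
    house = street[0] if street and street[0].isdigit() else ""
    name = street[1][:3] if len(street) > 1 else ""
    return f"{last[:4]}|{first[:1]}|{zip5}|{house}|{name}"


def group_by_key(records: list[dict]) -> dict[str, list[dict]]:
    """Bucket records that share a matchkey as candidate matches."""
    groups = defaultdict(list)
    for rec in records:
        groups[matchkey(rec)].append(rec)
    return dict(groups)


if __name__ == "__main__":
    sample = [
        {"first": "Lindsey", "last": "Thompson", "street": "12 Main Street", "zip": "10001-1234"},
        {"first": "Linzy", "last": "Thomson", "street": "12 Main St.", "zip": "10001"},
        {"first": "Charles", "last": "Thompson", "street": "99 Oak Avenue", "zip": "60601"},
    ]
    for key, members in group_by_key(sample).items():
        print(key, len(members))
```

The two Thompson/Thomson records collapse to the same key while the third stays separate. The same key would miss a ‘Tomson’ spelling or a record with a new ZIP code, and each such miss sends you back to adjust the key and rerun.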

It’s a long, iterative process of trial and error, experimenting with various algorithms and matchcodes just to figure out how to get ‘adequate’ results. Customer data is unique, and the techniques required to match it are unlike any other form of data matching.

Insanity:

"Doing the same thing over and over and expecting different results."

Why the Syniti Intelligent Matching Engine?

1.

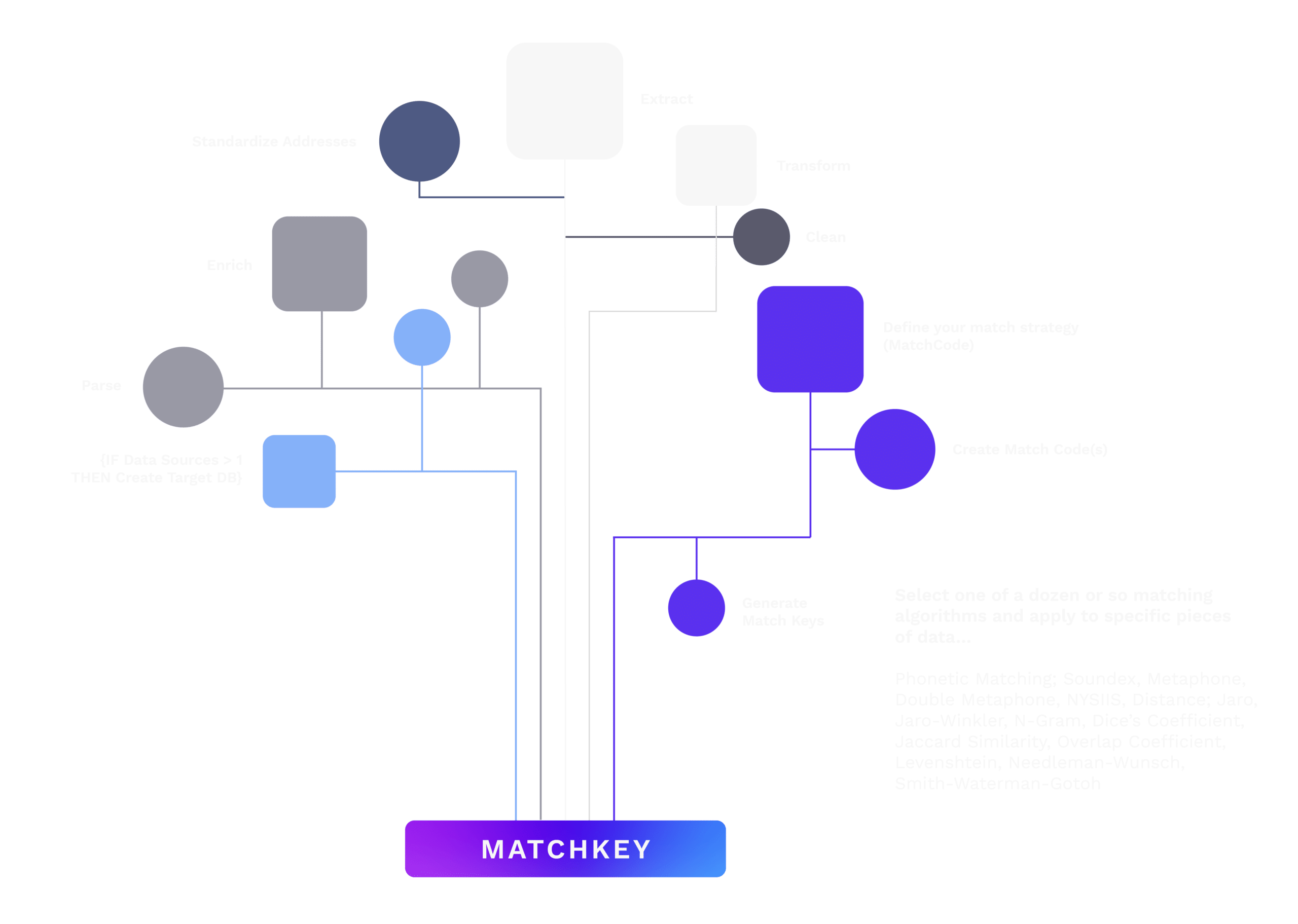

Unlike competing applications or scripted SQL queries, the Syniti Matching Engine doesn’t require data standardization, correction or manipulation before matching. It doesn’t require two different data sources to be normalized into a common format or a target database. It even treats addresses as objects, so you can match on unstandardized addresses with different inputs, and even on poorly structured global address data.

2.

The 360 Matching Engine matches entire records and doesn’t rely on a single algorithm applied to a field or on extended matchkeys. The Matching Engine uses multiple sophisticated approaches designed specifically for the nuances of contact data.

3.

The Engine intelligently grades and scores matches, using all available data to confidently determine which records are true matches and which are NOT!